Issue #15 – Five Strategies to Stop AI-Powered Impersonation Scams

Polar Insider | Issue #15 | Week of 11 June 2025

🧊 Introduction

Hi there,

Welcome to this week’s edition of Polar Insider. This issue explores how AI-generated voice and video deepfakes are revolutionizing impersonation scams and how your financial crime team can build a resilient first line of defence.

Here’s what you’ll find inside this issue:

📌 Top Story – Five Strategies to Stop AI-Powered Impersonation Scams

🔎 Case Study – CEO Voice Deepfake: The $25M Phone Call

🌍 Regulatory Roundup

🧰 Compliance Toolkit – Red flags, tools, and training resources

📌 Top Story

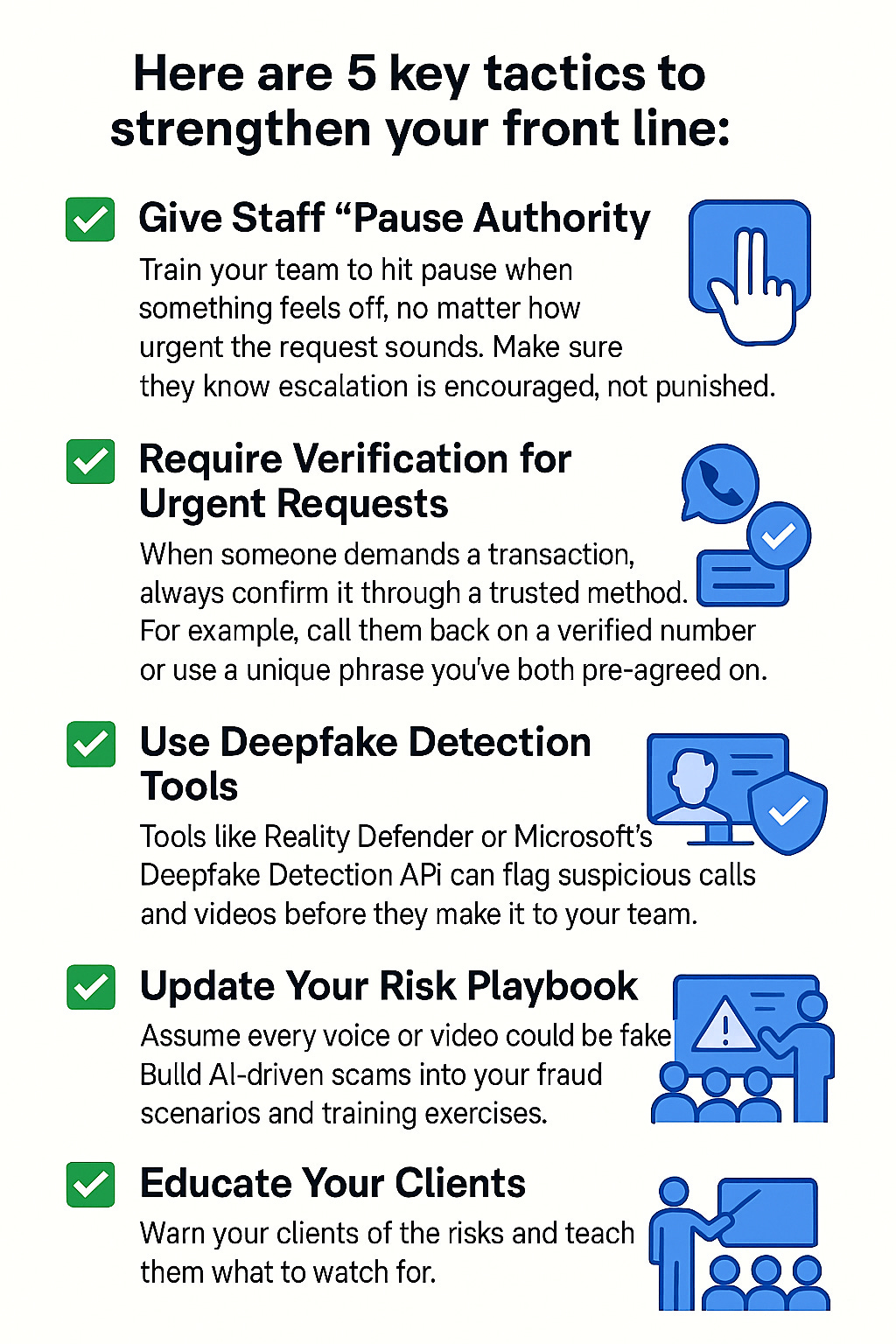

Five Strategies to Stop AI-Powered Impersonation Scams

AI has entered the fraud toolkit, and it's terrifyingly effective.

Thanks to rapid advances in generative AI, criminals can now clone voices, mimic facial movements, and generate convincing synthetic videos in minutes. This makes impersonation scams more believable, scalable, and harder to detect. For financial institutions, that means frontline staff are now the first (and often last) line of defence.

What’s Happening

AI-powered impersonation scams typically involve:

Voice cloning: With just a short audio clip from YouTube, voicemail, or a conference, fraudsters can recreate someone’s voice.

Deepfake videos: Tools like Synthesia or HeyGen create lifelike videos of CEOs or clients asking for urgent transfers or sensitive access.

Real-time deception: Criminals use deepfake voice on live calls to impersonate executives, family members, or vendors, pressuring staff to act quickly.

The Risk to Financial Institutions

Internal fraud pressure: Employees, especially in customer service, payments, and relationship management, may be manipulated into executing urgent transactions or bypassing controls.

Client trust damage: Wealthy or high-profile clients are being directly targeted with fake calls from “their banker.”

False confidence: Traditional identity verification tools (caller ID, video call requests) are increasingly vulnerable to AI manipulation.

🧠 Pro Tip: Focus less on whether a message "sounds right" and more on process trust. Are proper controls being followed, regardless of who is asking?

🔎 Case Study

The $25M CEO Deepfake Scam

In early 2024, a multinational company with offices in Hong Kong and the UK fell victim to a sophisticated deepfake scam. An employee received a video call from someone who looked and sounded exactly like their London-based CEO. The “CEO” urgently asked the employee to execute multiple transfers for a confidential acquisition.

What Happened

Pretext: The fake CEO referenced actual internal company details scraped from emails and LinkedIn.

Video Call: The employee participated in a Teams call with what appeared to be a full boardroom. Deepfake avatars and synthetic voices carried out the charade.

Execution: Over a period of days, the employee transferred a total of US$25 million to multiple overseas accounts.

Discovery & Aftermath

The real CEO discovered the issue only after finance flagged unusual transactions during reconciliation.

Investigators concluded that a criminal syndicate used deepfake generation tools alongside social engineering to clone the CEO and other executives.

No employees were charged, but the company instituted sweeping changes to its transaction verification protocols and staff escalation training.

Key Lessons for Financial Crime Teams

🚨 Contextual Awareness: Even realistic video/audio should not override standard verification checks.

🔐 Protect Internal Data: Criminals used leaked org charts, email footers, and public staff directories to build convincing narratives.

🧱 Escalation Culture: Encourage staff to delay or question even high-level instructions if something feels off.

This case illustrates why frontline training is no longer a compliance luxury; it's a cybersecurity necessity.
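For teams that want to turn these lessons into a system rule, here is a minimal sketch of a payment-release gate in Python. It is an illustration only: the `PaymentRequest` fields, the `release_decision` function, and the `HIGH_VALUE_THRESHOLD` figure are all hypothetical and not taken from any real banking system or from the case above. The point it tries to show is that the decision depends on whether controls were followed, not on who appears to be asking or how convincing the call was.

```python
from dataclasses import dataclass, field

# Hypothetical threshold; a real institution would set this by policy.
HIGH_VALUE_THRESHOLD = 10_000


@dataclass
class PaymentRequest:
    requester: str                    # who the caller claims to be
    amount: float
    channel: str                      # e.g. "video_call", "phone", "email"
    callback_verified: bool = False   # confirmed via contact details already on file
    approvers: set[str] = field(default_factory=set)
    staff_concern: bool = False       # a staff member flagged that something feels off


def release_decision(req: PaymentRequest) -> str:
    """Return "release", "hold", or "escalate" based on process alone."""
    # Escalation culture: any staff concern pauses the payment, whatever the seniority.
    if req.staff_concern:
        return "escalate"
    # Realistic audio or video never substitutes for an out-of-band callback.
    if not req.callback_verified:
        return "hold"
    # High-value payments need two approvers other than the requester.
    independent = req.approvers - {req.requester}
    if req.amount >= HIGH_VALUE_THRESHOLD and len(independent) < 2:
        return "hold"
    return "release"


# Example: an urgent request on a convincing video call, with no callback yet.
urgent = PaymentRequest(requester="CEO", amount=5_000_000, channel="video_call")
print(release_decision(urgent))
```

Note that `release_decision` never reads `requester` seniority or `channel` when deciding. That is deliberate: a request from the "CEO" on a flawless video call is held exactly like any other unverified request.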

🌍 Regulatory Roundup

Global Authorities Turn Their Focus to Deepfake & AI Fraud Risks

As AI-generated impersonation scams surge, regulators are stepping in to address emerging threats to financial systems and digital identity frameworks.

United States

SEC & FinCEN Joint Advisory (April 2025): Warned financial institutions of “synthetic identity fraud driven by deepfake technologies.” Urged banks to adopt biometric fraud detection and multi-channel identity checks.

FTC Deepfake Disclosure Act (Proposed): Would require clear labelling of synthetic content in financial advertising and communications.

United Kingdom

FCA Consultation on AI Risk Governance: Set to mandate real-time deepfake detection for regulated financial firms handling voice-verified transactions.

National Cyber Security Centre (NCSC) issued an alert identifying AI-based impersonation as a Tier 1 social engineering threat, recommending firms update staff training and verification protocols.

European Union

AI Act (in force since August 2024): Subjects deepfakes to transparency obligations, requiring AI-generated or manipulated audio, image, and video content to be disclosed as such. Financial firms deploying AI systems that the Act classes as high-risk must implement strict governance and transparency controls.

ESAs Joint Opinion: The European Supervisory Authorities emphasized the risk of “identity deception via generative AI” in AML/CFT compliance workflows.

Asia-Pacific

Monetary Authority of Singapore (MAS) launched an AI Trust Framework urging financial institutions to validate biometric security systems against synthetic voice and face inputs.

AUSTRAC is consulting on adding “synthetic impersonation” red flags to its SMR (Suspicious Matter Reporting) guidance following several domestic business email compromise (BEC) cases with AI elements.

Key Message:

Global regulators are no longer treating deepfake scams as science fiction. From the SEC to AUSTRAC, authorities are pushing institutions to adopt AI-aware controls, improve staff training, and audit identity verification processes for resilience in an age of synthetic deception.

🧰 Compliance Toolkit

Enhance your defences with these resources:

🔎 Deepfake Detection Tools

• Reality Defender – browser extension for screening fake audio/video

📎 Link → http://realitydefender.com/

• Deepware Scanner – scans files for AI-generated content

📎 Link → https://scanner.deepware.ai/

📘 Internal Training Materials

• FINRA’s 2024 Guide to Deepfake Fraud in Financial Services

📎 Link → FINRA Regulatory Notice 24-09

• CISA’s Toolkit for AI Threat Awareness (updated April 2025)

📎 Link → CISA Cybersecurity Awareness Program Toolkit

• “Pause Authority” Playbook – Internal escalation flowchart template

💡 Client Communications Pack

• Email & SMS templates warning clients of impersonation risks

• FAQ: “Did my banker really call me?”

💬 Quote of the Week

"AI isn’t just an opportunity—it’s a weapon. The sooner your frontline treats every voice and face as potentially synthetic, the better your institution’s odds of surviving the next wave of fraud."

– Sarah McDowell, Director of Financial Crime, FinTech Australia

🎁 Bonus for Subscribers

Don’t forget to download your copy of the 2025 Financial Crime Regulatory Tracker (USA, UK, AU).

Stay on top of AML requirements and enforcement trends globally.